Georgia Weston

- on February 08, 2024

Best Natural Language Processing (NLP) Models

Natural language processing is one of the most discussed topics in the AI landscape. It underpins generative AI applications that write essays and chatbots that converse naturally with human users. As the popularity of ChatGPT soared, interest in the best NLP models gained momentum. Natural language processing focuses on building machines that can interpret, manipulate, and generate natural human language.

It evolved from the field of computational linguistics and uses computer science to understand the principles of language. Natural language processing is already transforming many parts of people's everyday lives. In addition, the commercial applications of NLP models have drawn further attention to them. Let us learn more about the most renowned NLP models and how they differ from each other.

What is the Importance of NLP Models?

The search for natural language processing models draws attention to their utility. What is the reason for learning about NLP models? NLP models have become one of the most visible highlights in the world of AI because of their varied use cases. Common tasks for which NLP models have gained attention include sentiment analysis, machine translation, spam detection, named entity recognition, and grammatical error correction. They can also help with topic modeling, text generation, information retrieval, question answering, and summarization.

All the top NLP models work by identifying the relationships between different components of language, such as the letters, words, and sentences in a text dataset. NLP models use different methods for three distinct stages: data preprocessing, feature extraction, and modeling.

The data preprocessing stage improves the performance of the model by turning words and characters into a format the model can work with. Data preprocessing is an integral part of data-centric AI. Notable techniques for data preprocessing include sentence segmentation, tokenization, stop-word removal, and stemming and lemmatization.
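The preprocessing techniques listed above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production pipeline: the stop-word list is a tiny assumed sample, and `crude_stem` is a hypothetical stand-in for a real stemmer such as Porter's.

```python
import re

# A tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"the", "is", "a", "an", "and", "of", "to", "in", "are"}

def split_sentences(text):
    """Sentence segmentation: split after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def tokenize(sentence):
    """Tokenization: lowercase the sentence and keep runs of letters and digits."""
    return re.findall(r"[a-z0-9]+", sentence.lower())

def remove_stop_words(tokens):
    """Stop-word removal: drop very common words that carry little meaning."""
    return [t for t in tokens if t not in STOP_WORDS]

def crude_stem(token):
    """Very rough suffix stripping; a stand-in for a real stemming algorithm."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(text):
    """Run all four steps and return one list of stems per sentence."""
    return [
        [crude_stem(t) for t in remove_stop_words(tokenize(s))]
        for s in split_sentences(text)
    ]

print(preprocess("The models are learning. Spam filters blocked the emails!"))
```

Lemmatization is left out on purpose, since mapping a word to its dictionary form needs a vocabulary that a short sketch cannot carry.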

The feature extraction stage produces features, or numbers, that describe the relationship between documents and the text they contain. Conventional techniques for feature extraction include bag-of-words, generic feature engineering, and TF-IDF. Newer techniques for feature extraction in popular NLP models include GloVe, Word2Vec, and learning the important features during the training process of neural networks.
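To make bag-of-words and TF-IDF concrete, here is a small sketch in plain Python. It assumes documents are already tokenized and uses one common TF-IDF variant (relative term frequency times the natural log of inverse document frequency); libraries differ in the exact smoothing they apply.

```python
import math
from collections import Counter

def bag_of_words(tokens):
    """Bag-of-words: count how often each term occurs, ignoring word order."""
    return Counter(tokens)

def tf_idf(corpus):
    """corpus is a list of token lists; returns one {term: weight} dict per document."""
    n_docs = len(corpus)
    doc_freq = Counter()
    for tokens in corpus:
        doc_freq.update(set(tokens))  # count each term once per document
    weights = []
    for tokens in corpus:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights

corpus = [["spam", "offer", "offer"], ["meeting", "offer"]]
for doc_weights in tf_idf(corpus):
    print(doc_weights)
```

A term that appears in every document, such as `offer` in this toy corpus, gets a weight of zero, which is the point of the inverse document frequency factor.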

The final stage, modeling, is where NLP models are actually built. Once you have preprocessed data, you can feed it into an NLP architecture that models the data to accomplish the desired task. For example, numerical features can serve as inputs for different models. Deep neural networks and language models are the most notable examples of modeling.
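As a rough illustration of numerical features serving as model inputs, the sketch below classifies a text by finding the labelled example whose word counts are most similar. The nearest-example approach and the names `cosine` and `classify` are assumptions chosen for brevity, not a method the article prescribes.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two term-count dictionaries."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def classify(tokens, labelled_examples):
    """Return the label of the most similar example (ties go to the earliest one)."""
    features = Counter(tokens)
    best_label, _ = max(
        ((label, cosine(features, Counter(example)))
         for example, label in labelled_examples),
        key=lambda pair: pair[1],
    )
    return best_label

examples = [
    (["win", "free", "prize"], "spam"),
    (["meeting", "agenda", "tomorrow"], "ham"),
]
print(classify(["free", "prize", "today"], examples))
```

Deep neural networks replace the hand-built counts and the similarity rule with learned representations, but the flow from text to numbers to prediction is the same.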

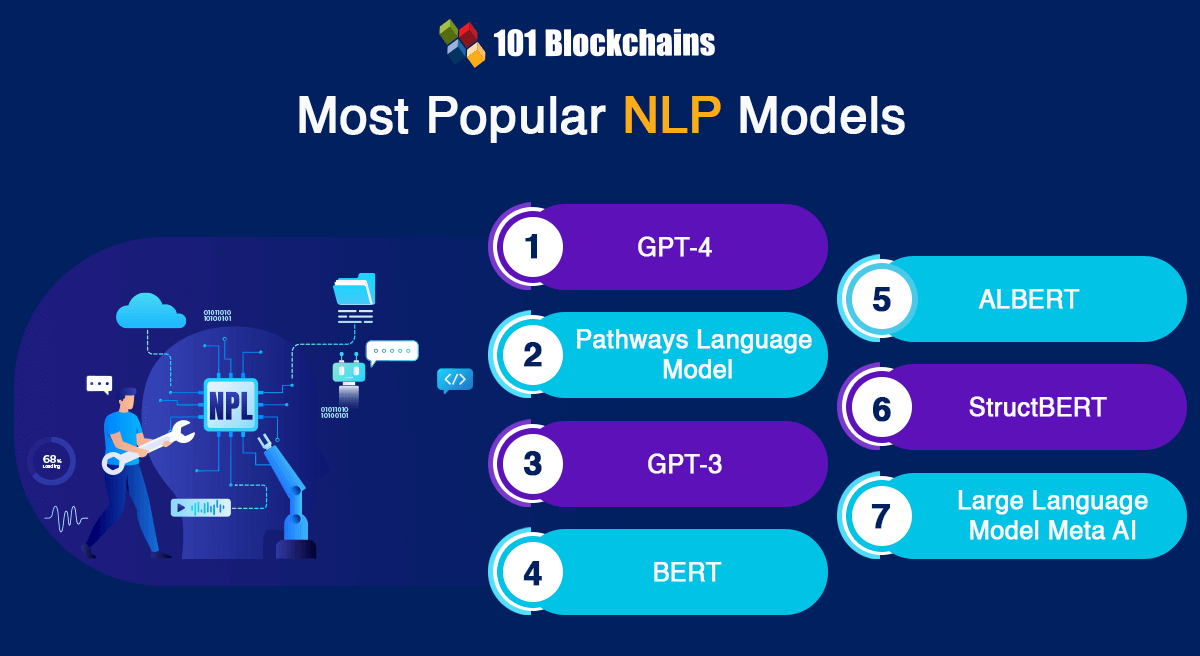

Most Popular Natural Language Processing Models

The arrival of pre-trained language models and transfer learning in the domain of NLP created new benchmarks for language interpretation and generation. The latest research advancements in NLP models include the application of transformers to different types of downstream NLP tasks. Questions such as ‘Which NLP model gives the best accuracy?’ tend to lead towards a handful of popular names.

You may come across conflicting views in the NLP community about the value of massive pre-trained language models. Even so, the latest advancements in the domain of NLP have been driven by massive improvements in computing capacity alongside the discovery of new ways of optimizing models to achieve high performance. Here is an outline of the most renowned or commonly used NLP models that you should watch out for in the AI landscape.

Generative Pre-Trained Transformer 4

Generative Pre-trained Transformer 4, or GPT-4, is among the most popular NLP models in the market right now. It tops this NLP models list largely because of the popularity of ChatGPT. If you have used ChatGPT Plus, then you have used GPT-4. It is a large language model created by OpenAI, and its multimodal nature means that it can take both images and text as input. GPT-4 is therefore considerably more versatile than the previous GPT models, which could only take text inputs.

During pre-training, GPT-4 was trained to predict the next token in a sequence. It was then fine-tuned using feedback from humans and AI systems, a step intended to align its behavior with human values and specified policies for AI use.
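The training objective described above, predicting what comes next, can be illustrated with a toy bigram counter. This is only a sketch of the objective and bears no resemblance to how GPT-4 is actually built; the function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count, for every token, which tokens follow it in the training text."""
    follow = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follow[current][nxt] += 1
    return follow

def predict_next(follow, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    if token not in follow:
        return None
    return follow[token].most_common(1)[0][0]

tokens = "the cat sat on the mat the cat ran".split()
model = train_bigram(tokens)
print(predict_next(model, "the"))
```

A large language model replaces the count table with a neural network conditioned on a long context, but it is trained against the same kind of target.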

GPT-4 has played a crucial role in enhancing the capabilities of ChatGPT. However, it still experiences some of the challenges that were present in the previous models, such as hallucinating facts. OpenAI has not disclosed the parameter count of GPT-4. The widely quoted figure of 175 billion parameters belongs to GPT-3, the family from which GPT-3.5, the model behind the original ChatGPT, was derived.

Pathways Language Model

The next addition among the best NLP models is the Pathways Language Model, or PaLM, created by the Google Research team. With 540 billion parameters, it represents a major step forward in language technology.

PaLM was trained with Pathways, a computing system that distributes training efficiently across many processors. One of the most crucial highlights of the PaLM model is the scalability of its training process, which involved 6144 TPU v4 chips and ranks among the largest TPU-based training configurations.

PaLM is one of the popular NLP models with the potential to revolutionize the NLP landscape. Its training data mixes different sources, including datasets in English and many other languages. These sources include books, conversations, code from GitHub, web documents, and Wikipedia content.

With such an extensive training dataset, the PaLM model delivers excellent performance in language tasks such as sentence completion and question answering. It also excels in reasoning and can help with complex math problems while providing clear explanations. In terms of coding, PaLM performs comparably to specialized code models while requiring less code in its training data.

GPT-3

GPT-3 is a transformer-based NLP model that can perform question answering, translation, and poetry composition. It is also one of the top NLP models for tasks involving reasoning, such as unscrambling words. In addition, GPT-3 offers the flexibility to write news articles and generate code. GPT-3 works by modeling the statistical dependencies between words.

GPT-3 has 175 billion parameters and was trained on text filtered from roughly 45 TB of raw data sourced from the internet. At its release, this scale made GPT-3 one of the largest pre-trained NLP models. Another interesting feature of GPT-3 is that it can perform many downstream tasks without fine-tuning, given only an instruction or a few examples in the prompt. GPT-3 is exposed through a ‘text in, text out’ API that lets developers steer the model with relevant instructions.
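Because the interface is text in, text out, steering the model comes down to assembling a prompt string. The sketch below builds a few-shot prompt from an instruction and worked examples. The helper name `build_few_shot_prompt` and the `Input:`/`Output:` layout are assumptions for illustration, and no real API call is made.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and a new query into one prompt."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The prompt ends with an open "Output:" so the model completes the answer.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("house", "maison")],
    "bread",
)
print(prompt)
```

Changing the task means changing only the instruction and examples, which is why no fine-tuning step is needed for many use cases.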

Bidirectional Encoder Representations from Transformers

Bidirectional Encoder Representations from Transformers, or BERT, is another promising entry in this NLP models list for its unique features. BERT was created by Google as a technique for NLP pre-training. It builds on the transformer, a neural network architecture that leverages the self-attention mechanism for understanding natural language.
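The self-attention mechanism mentioned above can be sketched in plain Python. This simplified version uses the input vectors directly as queries, keys, and values, which is an assumption made for brevity; real transformer layers first apply learned projection matrices and use several attention heads.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = inputs."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Similarity of this position to every position, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all input vectors.
        outputs.append([
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ])
    return outputs

print(self_attention([[1.0, 0.0], [0.0, 1.0]]))
```

Every output position mixes information from every input position, which is what lets the model read context on both sides of a word.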

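The transformer architecture that BERT builds on was created to resolve the problems associated with neural machine translation, or sequence transduction, which covers tasks that transform an input sequence into an output sequence. BERT itself does not generate output sequences. It produces contextual representations of text, which makes it a fit for language understanding tasks such as question answering and text classification rather than text-to-speech conversion or speech recognition.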

You can find a reasonable answer to “Which NLP model gives the best accuracy?” by diving into the details of transformers. The transformer model utilizes two different mechanisms: an encoder and a decoder. The encoder reads the text input, while the decoder produces predictions for the task. Because BERT focuses on building an effective language model, it uses the encoder mechanism only.

At its release, BERT achieved state-of-the-art results on 11 NLP tasks. The training data of BERT includes 2,500 million words from Wikipedia and 800 million words from the BookCorpus dataset. Google Search uses BERT to interpret queries more accurately. Other Google applications, including Google Docs, are also reported to use BERT for accurate text prediction.

ALBERT

Pre-trained language models are one of the prominent highlights in the domain of natural language processing, and larger pre-trained models generally improve performance on downstream tasks. However, an increase in model size creates concerns such as GPU/TPU memory limitations and extended training times. Google therefore introduced a lighter and more optimized version of the BERT model.

The new model, ALBERT, features two distinct techniques for parameter reduction: factorized embedding parameterization and cross-layer parameter sharing. Factorized embedding parameterization decouples the size of the hidden layers from the size of the vocabulary embedding.

Cross-layer parameter sharing, in turn, prevents the number of parameters from growing with the depth of the network. Together, the two techniques reduce memory consumption and increase the model’s training speed. ALBERT also introduces a self-supervised loss for sentence order prediction, which addresses a prominent weakness of BERT in modeling inter-sentence coherence.
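The effect of both parameter-reduction techniques can be checked with simple arithmetic. The sketch below counts parameters only. The sizes used (a 30,000-word vocabulary, hidden size 768, embedding size 128) and the per-layer figure of 7 million are illustrative assumptions, not values taken from this article.

```python
def embedding_params(vocab_size, hidden_size, embedding_size=None):
    """Parameters in the token embedding, with or without factorization."""
    if embedding_size is None:
        # Standard embedding: one hidden-sized vector per vocabulary entry.
        return vocab_size * hidden_size
    # Factorized: a small embedding table plus a projection up to the hidden size.
    return vocab_size * embedding_size + embedding_size * hidden_size

def encoder_params(params_per_layer, num_layers, share_across_layers):
    """Parameters in the encoder stack, with or without cross-layer sharing."""
    if share_across_layers:
        return params_per_layer  # every layer reuses the same weights
    return params_per_layer * num_layers

V, H, E = 30_000, 768, 128  # assumed sizes for illustration
print(embedding_params(V, H))      # 23040000
print(embedding_params(V, H, E))   # 3938304
print(encoder_params(7_000_000, 12, share_across_layers=False))  # 84000000
print(encoder_params(7_000_000, 12, share_across_layers=True))   # 7000000
```

With sharing enabled, adding more layers increases computation but not the parameter count, which is why depth no longer drives memory use.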

StructBERT

Attention towards BERT has been gaining momentum due to its effectiveness in natural language understanding, or NLU. It has achieved impressive accuracy on different NLP tasks, such as semantic textual similarity, question answering, and sentiment classification. While BERT is one of the best NLP models, it still leaves scope for improvement. StructBERT extends BERT by incorporating language structures into the pre-training stage.

StructBERT relies on structural pre-training to deliver strong empirical results on different downstream tasks. For example, it reported higher scores on the GLUE benchmark than other published models at the time. It can also improve accuracy and performance on question-answering tasks. Just like many other pre-trained NLP models, StructBERT can support businesses with different NLP tasks, such as document summarization, question answering, and sentiment analysis.

Large Language Model Meta AI

Large Language Model Meta AI, the LLM from Meta (formerly Facebook), arrived in the NLP ecosystem in 2023. Also known as Llama, it serves as an advanced language model. It might become one of the most popular NLP models soon, with versions of up to 70 billion parameters. In the initial stages, only approved developers and researchers could access the Llama model. However, it is now openly available, which allows a broader community to utilize and explore the capabilities of Llama.

One of the important details about Llama is the adaptability of the model. It comes in different sizes, including smaller versions that need less computing power. This flexibility makes Llama more accessible for practical use cases and testing, and it opens the door to new experiments.

The most interesting thing about Llama is that it reached the public unintentionally, when its weights leaked online rather than arriving through a planned release. The sudden arrival of Llama, with doors open for experimentation, led to the creation of new and related models like Orca. These models build on the capabilities of Llama. For example, Orca utilizes the comprehensive linguistic capabilities associated with Llama.

Conclusion

This outline of top NLP models showcases some of the most promising entries in the market right now. The interesting thing about NLP is that you can find multiple models tailored for unique applications, each with different advantages. The growing use of NLP in business use cases and everyday activities has created curiosity about NLP models.

Candidates preparing for jobs in AI need to learn about new and existing NLP models and how they work. Natural language processing is an integral aspect of AI, and the continuously growing adoption of AI improves the prospects for NLP models as well. Learn more about NLP models and their components right now.