James Howell

- on March 29, 2024

Transfer Learning vs. Fine Tuning LLMs: Key Differences

Fine-tuning and transfer learning are two of the most prominent techniques for adapting large language models, or LLMs, to new tasks. Each technique starts from a pre-trained large language model. Before diving into the transfer learning vs. fine-tuning debate, it is important to note that both approaches help users leverage the knowledge in pre-trained models.

Interestingly, the two terms overlap: transfer learning can itself be seen as a type of fine-tuning, so in this comparison "fine-tuning" is best read as full fine-tuning. Even if they are interconnected, transfer learning and fine-tuning serve distinct objectives when adapting foundation LLMs. Let us learn more about the differences between them, with a detailed look at the implications of both techniques.

Definition of Transfer Learning

The best way to answer “What is the difference between transfer learning and fine-tuning?” is to learn about the two techniques. Transfer learning is an important concept in the use of large language models or LLMs. It involves the use of pre-trained LLMs on new tasks. Transfer learning leverages existing pre-trained LLMs from families such as GPT, BERT, and others that were trained for a specific task.

For example, BERT is tailored for Natural Language Understanding, while GPT is created for Natural Language Generation. Transfer learning takes these LLMs and tailors them for a different target task with prominent similarities. The target task can be a domain-specific variation of the source task.

The primary objective in transfer learning revolves around using the knowledge obtained from the source task to achieve enhanced performance on target tasks. It is useful in scenarios where you have limited labeled data to achieve the target task. You must also note that you don’t have to pre-train the LLM from scratch.

You can dive deeper into the transfer learning vs. fine-tuning comparison by accounting for the training scope in transfer learning. In transfer learning, only the later layers of the model and their parameters are selected for training. The early layers and their parameters are frozen because they represent general-purpose features. In vision models, these are features such as textures and edges; in language models, they correspond to broadly reusable linguistic patterns.
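
The layer-freezing idea can be illustrated with a minimal sketch in plain Python. The `Layer` class, the layer names, and the parameter counts below are hypothetical and exist only for illustration; they do not describe any real model. In a framework such as PyTorch, the analogous step is setting `requires_grad = False` on the parameters of each frozen layer.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical stand-in for a model layer (illustration only)."""
    name: str
    num_params: int
    trainable: bool = True


def freeze_early_layers(layers, num_trainable_last):
    """Freeze every layer except the last `num_trainable_last` ones."""
    cutoff = len(layers) - num_trainable_last
    for index, layer in enumerate(layers):
        layer.trainable = index >= cutoff
    return layers


def trainable_fraction(layers):
    """Share of all parameters that will still receive updates."""
    total = sum(layer.num_params for layer in layers)
    trainable = sum(layer.num_params for layer in layers if layer.trainable)
    return trainable / total


# Assumed toy model: six equally sized blocks plus a small task head.
model = [Layer(f"block_{i}", 1_000_000) for i in range(6)]
model.append(Layer("head", 10_000))

freeze_early_layers(model, num_trainable_last=2)
fraction = trainable_fraction(model)

for layer in model:
    state = "trainable" if layer.trainable else "frozen"
    print(f"{layer.name}: {state}")
print(f"trainable share of parameters: {fraction:.1%}")
```

In this toy setup only the last block and the head stay trainable, so most of the parameters keep their pre-trained values.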

The training method used in transfer learning is also known as parameter-efficient fine-tuning or PEFT. PEFT techniques freeze almost all the parameters of the pre-trained model and fine-tune only a restricted set of parameters. You must also remember that transfer learning involves a limited number of strategies, such as PEFT methods.
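
To get a feel for how small that restricted set can be, here is a short arithmetic sketch based on LoRA, one widely used PEFT method. The article does not name LoRA, so treat it as an illustrative assumption. LoRA keeps a weight matrix of shape `d_out x d_in` frozen and trains two small matrices of shapes `d_out x rank` and `rank x d_in` in its place. The sizes below (4096 and rank 8) are assumed values, not figures from any specific model.

```python
def lora_param_count(d_in, d_out, rank):
    """Trainable parameters added by a low-rank update B @ A,
    where A has shape (rank, d_in) and B has shape (d_out, rank)."""
    return rank * (d_in + d_out)


def full_param_count(d_in, d_out):
    """Parameters in the frozen weight matrix itself."""
    return d_in * d_out


# Assumed sizes for a single projection matrix (illustration only).
d_in = d_out = 4096
rank = 8

added = lora_param_count(d_in, d_out, rank)
frozen = full_param_count(d_in, d_out)
ratio = added / frozen

print(f"{added:,} trainable vs {frozen:,} frozen ({ratio:.2%})")
# prints: 65,536 trainable vs 16,777,216 frozen (0.39%)
```

Under these assumptions, the trainable parameters for that one matrix come to well under 1% of the frozen weights.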

Working Mechanism of Transfer Learning

To make sense of the fine-tuning vs. transfer learning debate, it helps to look at how transfer learning works. You can understand the working mechanism of transfer learning in three distinct stages. The first stage is identifying the pre-trained LLM. You should choose a pre-trained model that was trained on a large dataset to address tasks in a general domain, such as a BERT model.

In the next stage, you have to determine the target task for which you want to implement transfer learning on the LLM. Make sure that the task aligns with the source task in some form. For example, it could be the classification of contract documents, or of resumes for recruiters. The final stage of training LLMs through transfer learning involves performing domain adaptation. You can use the pre-trained model as the starting point for the target task. Depending on the complexity of the problem, you might have to freeze some layers of the model so that their parameters receive no updates.

The working mechanism of transfer learning gives a clear impression of its advantages, and those advantages make the comparison with fine-tuning easier to follow. Transfer learning offers promising advantages such as enhancements in efficiency, performance, and speed.

You can notice how transfer learning reduces the requirement of extensive data in the target task, thereby improving efficiency. At the same time, it also ensures a reduction of training time as you work with pre-trained models. Most importantly, transfer learning can help achieve better performance in use cases where the target task can access limited labeled data.

Definition of Fine-Tuning

As you move further in exploring the difference between transfer learning and fine-tuning, it is important to learn about the next player in the game. Fine-tuning, or full fine-tuning, has emerged as a powerful tool in the domain of LLM training. Full fine-tuning starts from pre-trained models that have been trained on large datasets. It tailors those models to a specific task by continuing the training process on smaller, task-centric datasets.

Working Mechanism of Fine-Tuning

At a high level, fine-tuning an LLM involves updating all model parameters using supervised learning. You can answer “What is the difference between transfer learning and fine-tuning?” with more clarity once you are familiar with how fine-tuning works.

The first step in the process of fine-tuning LLMs is the identification of a pre-trained LLM. In the next step, you have to determine the task. The final stage in the process of fine-tuning involves adjusting the weights of the pre-trained model to achieve the desired performance in the new task.

Full fine-tuning depends on a massive amount of computational resources, such as GPU RAM. It can have a significant influence on the overall computing budget. Transfer learning, or PEFT, helps reduce computing and memory costs with the frozen foundation model parameters. PEFT techniques rely on fine-tuning a limited assortment of new model parameters, thereby offering better efficiency.
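
A rough back-of-the-envelope estimate shows where the memory difference comes from. The sketch below assumes 32-bit weights, 32-bit gradients, and an Adam-style optimizer that keeps two extra values per trainable parameter. It ignores activations, batch size, and mixed-precision tricks, so the numbers are illustrative lower bounds rather than measurements. The 7-billion-parameter model and the 1% trainable share are assumed values.

```python
BYTES_FP32 = 4  # assumed precision for weights, gradients, optimizer state


def training_memory_gb(total_params, trainable_params):
    """Rough memory for weights, gradients, and Adam-style optimizer state.

    Weights are stored for every parameter; gradients and the two
    optimizer moments are only needed for trainable parameters.
    """
    weights = total_params * BYTES_FP32
    gradients = trainable_params * BYTES_FP32
    optimizer_state = trainable_params * 2 * BYTES_FP32
    return (weights + gradients + optimizer_state) / 1e9


total = 7_000_000_000  # assumed model size (illustration only)

full_gb = training_memory_gb(total, trainable_params=total)
peft_gb = training_memory_gb(total, trainable_params=total // 100)

print(f"full fine-tuning: ~{full_gb:.2f} GB")
print(f"1% trainable:     ~{peft_gb:.2f} GB")
```

Under these assumptions, the frozen weights still have to fit in memory, but the gradient and optimizer overhead shrinks in proportion to the trainable share.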

How is Transfer Learning Different from Fine Tuning?

Large Language Models are one of the focal elements in the continuously expanding artificial intelligence ecosystem. At the same time, it is also important to note that LLMs have been evolving, and fundamental research into their potential provides the foundation for new LLM use cases.

The growing emphasis on transfer learning vs. fine-tuning comparisons showcases how the methods for tailoring LLMs to achieve specific tasks are major highlights for the AI industry. Here is an in-depth comparison between transfer learning and fine-tuning to find out which approach is the best for LLMs.

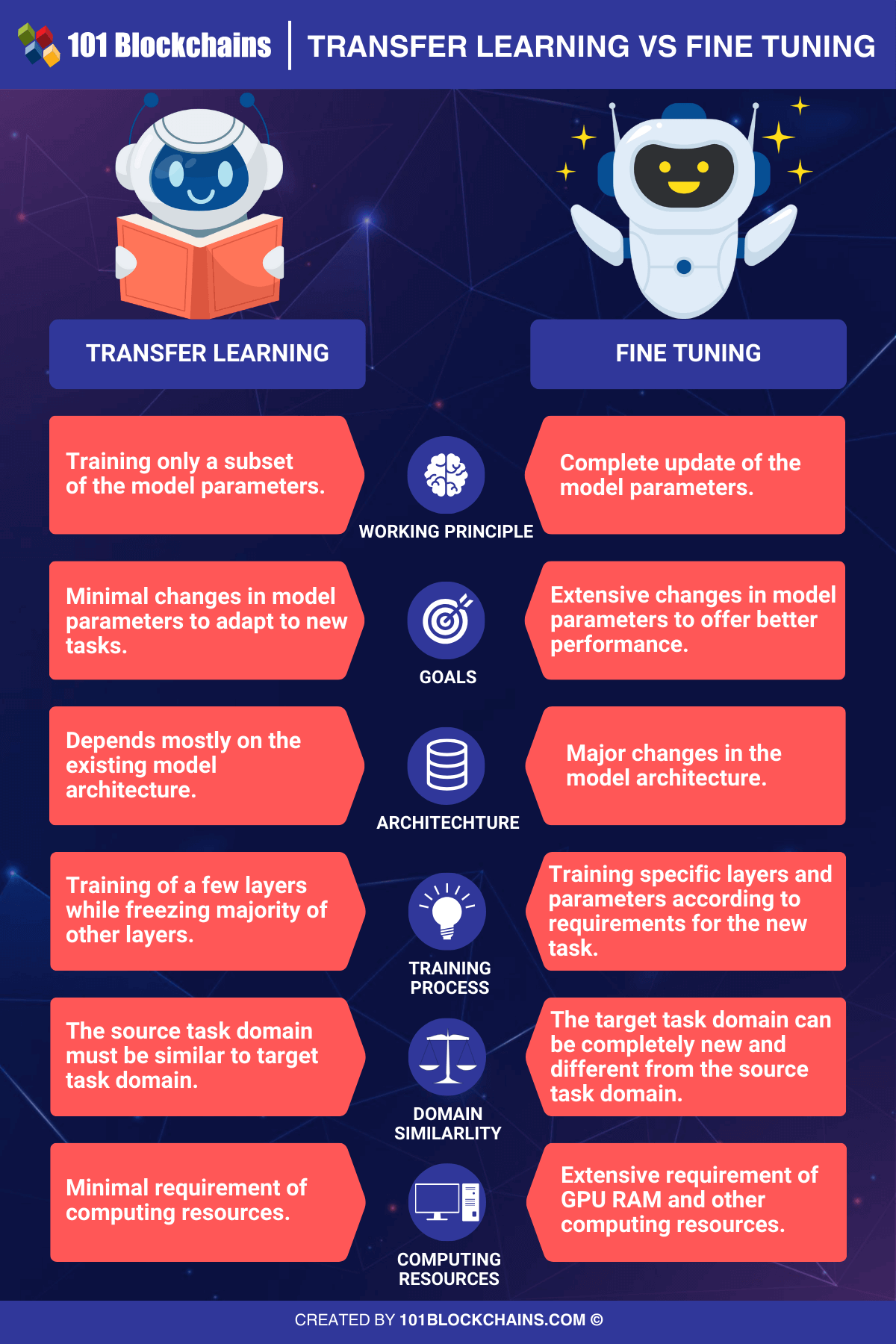

Working Principle

The foremost factor in a comparison between transfer learning and fine-tuning is the working principle. Transfer learning involves training a small subset of the model parameters or a limited number of task-specific layers. The most noticeable theme in every fine-tuning vs. transfer learning debate is the way transfer learning involves freezing most of the model parameters. The most popular strategy for transfer learning is the PEFT technique.

Full fine-tuning works on a completely opposite principle by updating all parameters of the pre-trained model over the course of the training process. How? The weights of each layer in the model go through modifications on the basis of new training data. Fine-tuning brings crucial modifications in the behavior of a model and its performance, with specific emphasis on accuracy. The process ensures that the LLM precisely adapts to the specific dataset or task, albeit with consumption of more computing resources.
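
The contrast between the two principles can be sketched with a deliberately tiny model in plain Python. Everything here is a hypothetical toy: `base` stands in for the pre-trained weights, `head` for a task-specific layer, and the data, learning rate, and step count are assumed values. The same gradient-descent loop is run twice, once with `base` frozen and once with every parameter updated.

```python
def predict(base, head, x):
    """Toy two-stage model: a 'pre-trained' base followed by a task head."""
    return head * (base * x)


def mse(base, head, data):
    """Mean squared error over (x, y) pairs."""
    return sum((predict(base, head, x) - y) ** 2 for x, y in data) / len(data)


def train(base, head, data, train_base, lr=0.01, steps=200):
    """Plain gradient descent; `base` is only updated if `train_base` is True."""
    for _ in range(steps):
        grad_base = 0.0
        grad_head = 0.0
        for x, y in data:
            error = predict(base, head, x) - y
            grad_head += 2 * error * base * x / len(data)
            grad_base += 2 * error * head * x / len(data)
        head -= lr * grad_head
        if train_base:
            base -= lr * grad_base
    return base, head


# Assumed toy task: learn y = 3x starting from base=1.0, head=0.5.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
initial_loss = mse(1.0, 0.5, data)

# Frozen base: only the head moves.
frozen_base, frozen_head = train(1.0, 0.5, data, train_base=False)
# Full update: every parameter moves.
full_base, full_head = train(1.0, 0.5, data, train_base=True)

print(f"initial loss: {initial_loss:.4f}")
print(f"frozen base -> base={frozen_base:.3f}, head={frozen_head:.3f}")
print(f"full update -> base={full_base:.3f}, head={full_head:.3f}")
```

In the frozen run the base keeps its starting value while the head absorbs the whole adjustment. In the full run both values move, which mirrors how full fine-tuning changes the weights throughout the model.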

Goals

The difference between transfer learning and fine-tuning is clearly visible in their goals. The objective of transfer learning emphasizes adapting the pre-trained model to a specific task without major changes in model parameters. With such an approach, transfer learning helps maintain a balance between retaining the knowledge gained during pre-training and adapting to the new task. It focuses on minimal task-specific adjustments to get the job done.

The objective of fine-tuning emphasizes changing the complete pre-trained model to adapt to new datasets or tasks. The primary goal of fine-tuning LLMs is maximum performance and accuracy on a specific task.

Architecture

You can also differentiate fine-tuning from transfer learning by learning how they affect model architecture. The answers to “What is the difference between transfer learning and fine-tuning?” emphasize the ways in which transfer learning works only on the existing architecture. It involves freezing most of the model parameters and fine-tuning only a small set of parameters.

Full fine-tuning changes the parameters of the LLM completely to adapt to the new task. The layer structure itself typically stays the same, but every weight within that architecture is open to change according to the requirements of the new task.

Training Process

The differences between fine-tuning and transfer learning also show up in the training process. Transfer learning involves training only a new top layer while keeping the other layers fixed. The fine-tuning vs. transfer learning debate frequently draws attention to this freezing of model parameters. In certain cases, the newly trained parameters account for only 1% to 2% of the weights of the original LLM.

The training process of fine-tuning LLMs places no such restriction on layers or parameters. The weights of any parameter can be updated according to the requirements of the new task.

Domain Similarity

Another factor for comparing transfer learning with fine-tuning is the similarity between the source task domain and the target task domain. Transfer learning is the ideal pick for scenarios where the new task domain is very similar to the original or source task domain. It works with a small new dataset and relies on the knowledge the pre-trained model gained from larger datasets.

Fine-tuning is considered more effective in scenarios where the new dataset is significantly large, as it helps the model learn specific features required for the new task. In addition, the new dataset must have a direct connection with the original dataset.

Computing Resources

The discussions about the transfer learning vs. fine-tuning comparison draw attention to the requirement of computing resources. Transfer learning involves limited use of computational resources as it is a resource-efficient approach. The working principle of transfer learning focuses on updating only a small portion of the LLM.

It needs limited processing power and memory, thereby offering the assurance of faster training time. Therefore, transfer learning is the ideal recommendation for scenarios where you have to train LLMs with limited computational resources and faster experimentation.

Fine-tuning works by updating all model parameters. As a result, it requires more processing power and memory, and it takes longer to train, with training times growing further for larger models. Full fine-tuning generally needs a large amount of GPU RAM, which drives up the cost of training LLMs.

Here is a comparison table on transfer learning vs. fine-tuning.

Final Words

The comparison between fine-tuning and transfer learning helps in uncovering the significance of the two training approaches. Both are crucial tools for optimizing LLMs, so the highlights of the fine-tuning vs. transfer learning comparison are worth knowing. Transfer learning and fine-tuning can help in tailoring large language models to achieve specific tasks, albeit with crucial differences. An in-depth understanding of the differences between fine-tuning and transfer learning can help identify which method suits specific use cases. Learn more about large language models and the implications of fine-tuning and transfer learning for LLMs right now.