Georgia Weston, January 04, 2024

Transfer Learning – A Guide for Deep Learning

Deep learning is an important discipline within machine learning. It attempts to mimic aspects of human intelligence through neural networks, which are built from nodes loosely modeled on neurons, the fundamental units of the human brain. As the field evolves, questions like ‘What is transfer learning?’ draw attention to emerging trends in machine learning.

How does it relate to machine learning and deep learning, and what does it help you achieve? Deep learning models are built from neural networks, which extract features from unstructured data through iterative training. However, iteratively training a neural network on a large dataset can take a lot of time.

One of the first things a transfer learning tutorial teaches is that transfer learning can reduce the time required to train neural networks. It is a promising technique for natural language processing and image classification tasks. Let us learn more about transfer learning and its significance in deep learning.

Definition of Transfer Learning

The best way to understand the importance of transfer learning in deep learning is to start with its definition. In simple words, transfer learning is the process of reusing a pre-trained model to solve a new problem. It is currently one of the most popular topics in deep learning because it can help train deep neural networks with little data. Transfer learning is also important in data science, as most real-world problems do not have enough labeled data points for training complex models.

A basic transfer learning example shows how the knowledge of a trained machine learning model can be applied to a related problem. For example, suppose you have a simple classifier that predicts whether an image includes a backpack. You can then use the knowledge the model gained during training to recognize other objects. Transfer learning exploits the knowledge gained in one task to improve generalization on another task. In other words, it transfers the weights a network learned on ‘Task A’ to a new task, ‘Task B.’
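To make "transferring weights" concrete, here is a minimal NumPy sketch. It assumes a toy two-layer network stored as a dictionary of arrays; the names `W1`, `b1`, `W2`, `b2`, the layer sizes, and the random "Task A" weights are hypothetical stand-ins for a real trained model. The Task B network copies the hidden layer learned on Task A and receives a fresh output layer sized for its own classes.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical weights of a network already trained on Task A
# (random values stand in for real learned weights).
task_a = {
    "W1": rng.normal(size=(64, 32)), "b1": np.zeros(32),  # hidden layer
    "W2": rng.normal(size=(32, 10)), "b2": np.zeros(10),  # 10-class output layer
}

def init_task_b(source: dict, n_classes: int, rng: np.random.Generator) -> dict:
    """Build a Task B network that reuses the hidden layer learned on Task A."""
    hidden_units = source["W1"].shape[1]
    return {
        # Transferred knowledge: copy the Task A hidden layer.
        "W1": source["W1"].copy(), "b1": source["b1"].copy(),
        # Task-specific part: a new, randomly initialized output layer.
        "W2": rng.normal(scale=0.01, size=(hidden_units, n_classes)),
        "b2": np.zeros(n_classes),
    }

def forward(params: dict, x: np.ndarray) -> np.ndarray:
    hidden = np.maximum(0.0, x @ params["W1"] + params["b1"])  # ReLU
    return hidden @ params["W2"] + params["b2"]                # logits

task_b = init_task_b(task_a, n_classes=3, rng=rng)
logits = forward(task_b, rng.normal(size=(5, 64)))
print(logits.shape)  # (5, 3)
```

Copying, rather than sharing, the arrays keeps the Task A model intact if the Task B weights are later updated.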

The general idea is to take the knowledge a model learned on a task with plenty of labeled training data and apply it to a new task that has little data. Rather than starting the learning process from scratch, you reuse the patterns learned by solving a related task. Transfer learning is primarily used in natural language processing and computer vision, where training models from scratch demands substantial computational power.

Variants of Transfer Learning

The definition of transfer learning raises questions about its types and how they differ from each other. The variants of transfer learning differ on three factors: what should be transferred, how it should be transferred, and when it should be transferred. The differences between variants arise because the source and target datasets in a transfer learning setting can come from different domains or serve different tasks.

The main variants covered in transfer learning tutorials are inductive, unsupervised, and transductive transfer learning. In inductive transfer learning, the source and target tasks differ, regardless of whether the source and target domains are similar. Unsupervised transfer learning is the recommended approach when you don’t have labeled data for training. Transductive transfer learning is useful when the tasks are the same or nearly the same, but the domains differ in their feature spaces or marginal probability distributions.

Working Mechanism of Transfer Learning

The next crucial aspect of transfer learning is how it works. In computer vision, neural networks typically detect edges in the first layers, shapes in the middle layers, and task-specific features in the last layers. In transfer learning, only the first and middle layers are reused. You retrain the last layers so that they can learn from the labeled data of the new task.

Consider a model trained to recognize a backpack in an image, which you now want to use for identifying sunglasses. Its earlier layers have already learned general features for recognizing objects. Therefore, you only need to retrain the last layers so that the model learns the aspects that distinguish sunglasses from other objects.

Transfer learning works by transferring as much knowledge as possible from the task the model was previously trained for to the new task. This knowledge can take different forms, depending on the problem and the data. For example, it could describe how the model is composed, which helps it identify new objects more easily.

What are the Reasons for Using Transfer Learning?

Transfer learning matters in deep learning because of its benefits. The primary advantages are shorter training time and better neural network performance. In addition, you do not need a lot of data.

Training a neural network generally requires a lot of data, which you cannot always access. Transfer learning helps in such cases: because the model has already been pre-trained, you can build a strong machine learning model with comparatively little data.

Transfer learning is useful for natural language processing, where creating large labeled datasets requires expert knowledge. It can also reduce training time, as training deep neural networks from scratch for complex tasks can take days or weeks. Transfer learning models can also reach good accuracy without large datasets. Most important of all, transfer learning is useful when you don’t have the computing resources required to train a model from scratch.

Where Can’t You Use Transfer Learning?

It is equally important to know the scenarios where you can’t use transfer learning. For example, transfer learning does not work well when the high-level features learned by the pre-trained model are not sufficient to differentiate the classes in your problem. A pre-trained model may be very good at identifying a door but struggle to tell whether it is open or closed. In such cases, you can use the low-level features from earlier layers instead of the high-level features, and you would have to retrain more layers of the model.
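One way to use features from earlier layers is to record every layer's activations and pick the layer whose features suit the new problem. The sketch below assumes a toy three-layer NumPy network with random, hypothetical weights; in a real framework you would typically build a sub-model that ends at an intermediate layer instead.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical pre-trained layers as (weights, bias) pairs, first to last.
layers = [
    (rng.normal(size=(16, 12)), np.zeros(12)),  # early layer: low-level features
    (rng.normal(size=(12, 8)), np.zeros(8)),    # middle layer
    (rng.normal(size=(8, 4)), np.zeros(4)),     # late layer: high-level, task-specific
]

def activations(x: np.ndarray) -> list:
    """Return the output of every layer so any of them can serve as features."""
    outputs = []
    for weights, bias in layers:
        x = np.maximum(0.0, x @ weights + bias)  # ReLU
        outputs.append(x)
    return outputs

batch = rng.normal(size=(10, 16))
acts = activations(batch)
low_level_features = acts[0]    # candidate features when high-level ones don't separate the classes
high_level_features = acts[-1]
print(low_level_features.shape, high_level_features.shape)  # (10, 12) (10, 4)
```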

When the source and target datasets are dissimilar, features transfer poorly. You may also come across situations where you have to remove some layers from a pre-trained model, and transfer learning may not work well in such cases. Removing layers alters the network that the pre-trained weights were learned for, and retraining the modified model can lead to overfitting. In addition, identifying how many layers you can remove without overfitting can be a time-consuming and challenging process.

Where Should You Implement Transfer Learning?

In machine learning, it is difficult to form rules that apply in general. However, a few guidelines can help you decide when to apply transfer learning in deep learning. Here are the recommended scenarios where you can use transfer learning.

- You don’t have an adequate amount of labeled training data for training the network from scratch.

- The first task and the new task have the same input.

- You have access to a network pre-trained on a similar task, typically on massive volumes of data.

These scenarios explain when transfer learning is useful. You should also consider whether the original model was trained with an open-source library like TensorFlow. In such cases, you can restore the model and then retrain some of its layers for your task.

Keep in mind that transfer learning is useful only if the features learned in the first task are general in nature. In addition, the model’s input must have the same size as the data it was originally trained on. If your data has a different size, you can add a pre-processing step that resizes the input to the required size.
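A resizing step can be as simple as the nearest-neighbor sketch below. It assumes images are NumPy arrays of shape (height, width, channels) and that the pre-trained model expects 224×224 RGB input, a common size that you should confirm for your own model. In practice you would more likely call a library function such as TensorFlow's `tf.image.resize`; this version only shows the idea without extra dependencies.

```python
import numpy as np

def resize_nearest(image: np.ndarray, target_hw: tuple) -> np.ndarray:
    """Resize an (H, W, C) image to target_hw using nearest-neighbor sampling."""
    in_h, in_w = image.shape[:2]
    out_h, out_w = target_hw
    # For each output row/column, pick the nearest source row/column.
    rows = (np.arange(out_h) * in_h / out_h).astype(int)
    cols = (np.arange(out_w) * in_w / out_w).astype(int)
    return image[rows[:, None], cols[None, :]]

photo = np.random.rand(480, 640, 3)           # hypothetical input photo
model_input = resize_nearest(photo, (224, 224))
print(model_input.shape)  # (224, 224, 3)
```

Nearest-neighbor sampling is the crudest option; bilinear interpolation usually preserves more detail and is the default in many libraries.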

How Can You Implement Transfer Learning?

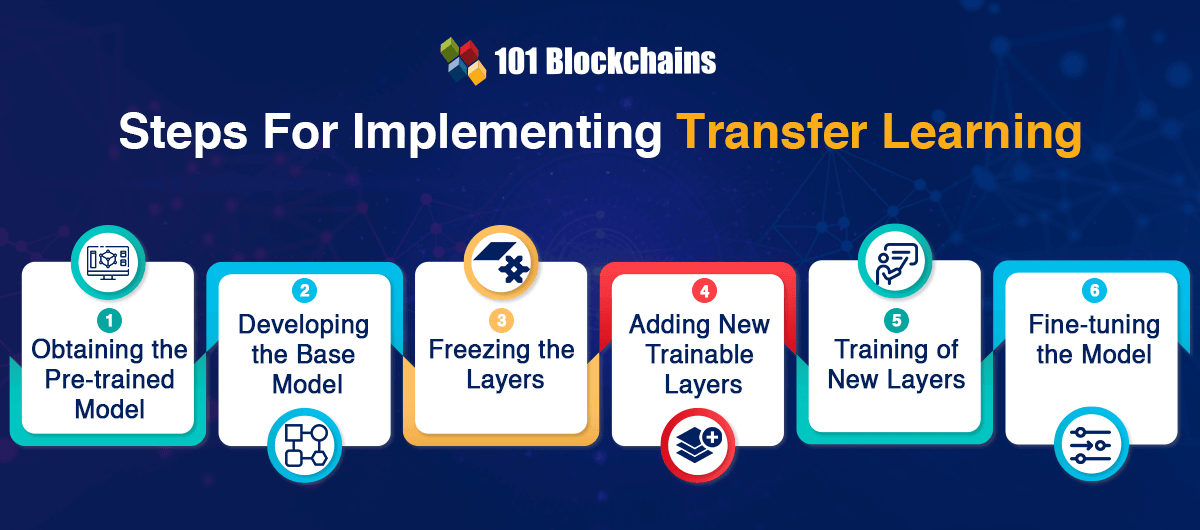

Once you know the advantages of transfer learning and have identified the right use cases for it, the next question is how to implement it. You can apply transfer learning with the following steps.

Obtaining the Pre-trained Model

The first step returns to the basic premise of transfer learning: you take a pre-trained model and retrain it for a new, similar task.

Therefore, begin by choosing a pre-trained model suited to your problem. You can find pre-trained models for transfer learning in several places, such as the Keras pre-trained models, pre-trained word embeddings, Hugging Face, and TensorFlow Hub.

Developing the Base Model

Next, instantiate the base model using an architecture such as Xception or ResNet, and download its pre-trained weights. Without the weights, you would only have the architecture and would have to train the model from scratch.

Note that the base model’s final output layer usually has more units than your problem requires (for example, a model trained on ImageNet has 1,000 output units, one per class). Therefore, you must remove the final output layer and add a new one that is compatible with your problem.
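The head swap can be sketched in NumPy as follows. This assumes a hypothetical base model reduced to a single "body" layer of 256 features followed by a 1,000-unit output layer; the sizes, the parameter names, and the 5-class target problem are illustrative, not properties of Xception, ResNet, or any other real architecture.

```python
import numpy as np

rng = np.random.default_rng(2)
N_INPUTS = 128   # hypothetical input size of the toy base model
FEATURES = 256   # hypothetical width of the base model's last hidden layer

# Hypothetical pre-trained model: a reusable body plus a 1,000-unit output layer.
pretrained = {
    "body_W": rng.normal(scale=0.01, size=(N_INPUTS, FEATURES)),  # stands in for all earlier layers
    "head_W": rng.normal(size=(FEATURES, 1000)),
    "head_b": np.zeros(1000),
}

def replace_head(model: dict, n_classes: int, rng: np.random.Generator) -> dict:
    """Drop the original output layer and attach a new one sized for the new problem."""
    # Keep every non-head parameter; those arrays are reused as-is, not copied.
    new_model = {name: w for name, w in model.items() if not name.startswith("head_")}
    features = model["body_W"].shape[1]
    new_model["head_W"] = rng.normal(scale=0.01, size=(features, n_classes))
    new_model["head_b"] = np.zeros(n_classes)
    return new_model

def predict(model: dict, x: np.ndarray) -> np.ndarray:
    hidden = np.maximum(0.0, x @ model["body_W"])  # ReLU features from the body
    return hidden @ model["head_W"] + model["head_b"]

model = replace_head(pretrained, n_classes=5, rng=rng)
print(predict(model, rng.normal(size=(4, N_INPUTS))).shape)  # (4, 5)
```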

Freezing the Layers

Next, freeze the layers of the pre-trained model. Freezing ensures that the weights in those layers are not updated during training. Without freezing, the pre-trained weights would change along with the new layers, and the knowledge the model gained earlier could be destroyed, which defeats the purpose of starting from a pre-trained model.
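Freezing can be sketched as skipping the update for frozen parameters during a gradient step. The toy example below assumes parameters and gradients are stored in NumPy dictionaries under hypothetical names; frameworks expose the same idea through the `trainable` attribute of a Keras layer or the `requires_grad` flag of a PyTorch parameter.

```python
import numpy as np

def sgd_step(params: dict, grads: dict, frozen: set, lr: float = 0.1) -> None:
    """Apply one in-place SGD update, leaving frozen parameters untouched."""
    for name, value in params.items():
        if name in frozen:
            continue  # frozen: keep the pre-trained weights exactly as they are
        value -= lr * grads[name]

params = {
    "base_W": np.ones((3, 3)),  # hypothetical pre-trained layer
    "head_W": np.ones((3, 2)),  # hypothetical new layer
}
grads = {name: np.full_like(value, 0.5) for name, value in params.items()}

sgd_step(params, grads, frozen={"base_W"}, lr=0.5)
print(params["base_W"][0, 0], params["head_W"][0, 0])  # 1.0 0.75
```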

Adding New Trainable Layers

Next, add new trainable layers that turn the old features into predictions on the new dataset. This step is necessary because the pre-trained model is loaded without its final output layer.

Training of New Layers

Next, train the new layers. Keep in mind that the original output of the pre-trained model differs from the output you want, which is why you add new dense layers. Most importantly, the final layer needs one unit for each desired output.
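The sketch below trains only a new output layer on top of frozen features. It assumes the frozen base model has already turned each example into a feature vector; random vectors and a synthetic labeling rule stand in for those features and labels (both hypothetical). The new layer is a softmax classifier with one unit per desired output, trained with plain gradient descent on the cross-entropy loss.

```python
import numpy as np

rng = np.random.default_rng(3)
N_FEATURES, N_CLASSES = 20, 3
LEARNING_RATE = 1.0  # illustrative value for this toy problem

# Hypothetical outputs of the frozen base model for 300 examples, with
# synthetic labels derived from a hidden linear rule.
features = rng.normal(size=(300, N_FEATURES))
labels = np.argmax(features @ rng.normal(size=(N_FEATURES, N_CLASSES)), axis=1)

def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

# New trainable layer: one unit per desired output class.
W = np.zeros((N_FEATURES, N_CLASSES))
b = np.zeros(N_CLASSES)
one_hot = np.eye(N_CLASSES)[labels]

for _ in range(500):
    probs = softmax(features @ W + b)
    grad_logits = (probs - one_hot) / len(features)  # gradient of mean cross-entropy
    W -= LEARNING_RATE * features.T @ grad_logits
    b -= LEARNING_RATE * grad_logits.sum(axis=0)

accuracy = np.mean(np.argmax(features @ W + b, axis=1) == labels)
print(f"training accuracy: {accuracy:.2f}")
```

Because the base model stays frozen, its features can be computed once and reused for every epoch, which is a large part of why this phase is cheap.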

Fine-tuning the Model

The final stage is fine-tuning the model to improve its performance. You can fine-tune by unfreezing the base model and training the entire model on the complete dataset. Use a low learning rate, so that performance improves without overwriting the pre-trained weights or overfitting.
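Fine-tuning can be sketched as a second training phase in which the base layer is unfrozen and the whole model is updated with a small learning rate. The toy example below assumes a one-hidden-layer NumPy model with hypothetical "pre-trained" base weights, a head assumed to have been trained while the base was frozen (random values stand in for both), synthetic regression data, and an illustrative learning rate of 1e-3; none of these values come from a specific library or recipe.

```python
import numpy as np

rng = np.random.default_rng(4)

# Hypothetical data for the new task (a noisy synthetic regression target).
x = rng.normal(size=(200, 8))
y = np.sin(x[:, :1]) + 0.1 * rng.normal(size=(200, 1))

# Hypothetical weights: a "pre-trained" base layer and a previously trained head.
W_base = rng.normal(scale=0.5, size=(8, 16))
W_head = rng.normal(scale=0.1, size=(16, 1))

def loss_and_grads(base: np.ndarray, head: np.ndarray):
    """Mean squared error of the full model and its gradients for both layers."""
    hidden = np.tanh(x @ base)
    err = hidden @ head - y
    loss = np.mean(err ** 2)
    d_pred = 2.0 * err / len(x)                         # dLoss/dPrediction
    g_head = hidden.T @ d_pred
    d_hidden = (d_pred @ head.T) * (1.0 - hidden ** 2)  # back through tanh
    g_base = x.T @ d_hidden
    return loss, g_base, g_head

FINE_TUNE_LR = 1e-3  # deliberately small so the pre-trained base moves only slightly
start_base = W_base.copy()
loss_before = loss_and_grads(W_base, W_head)[0]

for _ in range(200):
    _, g_base, g_head = loss_and_grads(W_base, W_head)
    W_base -= FINE_TUNE_LR * g_base  # the base is unfrozen, so it is updated too
    W_head -= FINE_TUNE_LR * g_head

loss_after = loss_and_grads(W_base, W_head)[0]
print(f"loss: {loss_before:.4f} -> {loss_after:.4f}")
print("largest change to a base weight:", np.abs(W_base - start_base).max())
```

The small step size is the design choice that matters here: the base weights still move, but only slightly, so the features learned on the original task are adjusted rather than replaced.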

Conclusion

Transfer learning can support improvements in a wide range of deep learning tasks, especially in natural language processing and computer vision. One of its most attractive aspects is that it can save a lot of training time. In addition, you can create new deep learning models with better performance and accuracy for solving complex tasks. You can access pre-trained models from different sources and build your own deep learning models with far less effort than training from scratch. Learn more about the fundamentals of machine learning and deep learning to understand the real-world use cases for transfer learning.