

Georgia Weston

on October 27, 2023

TensorFlow vs PyTorch – Key Differences

Deep learning is currently one of the most popular subfields of artificial intelligence. However, questions and concerns about its implications strongly influence how widely it is adopted in real-world applications. The TensorFlow vs PyTorch debate reflects the steadily growing emphasis on putting deep learning into practice.

These two prominent deep learning frameworks each have a distinct set of advantages and limitations. Deep learning focuses on building computer systems that emulate aspects of human intelligence to solve real-world problems through artificial neural networks. Big tech companies such as Facebook and Google have introduced multiple frameworks to make developing and training neural networks easier.

Artificial Neural Networks (ANNs) have proved to be effective choices for supervised learning. However, programming an ANN by hand can be an uphill task. Deep learning frameworks such as TensorFlow and PyTorch have therefore emerged as promising solutions for simplifying the development and use of ANNs. Many other deep learning frameworks have also made their mark in the AI domain, but this post focuses on the differences between PyTorch and TensorFlow to find out which is the better alternative.

Overview of TensorFlow

Before you explore the differences between TensorFlow and PyTorch, it is important to learn the fundamentals of both frameworks. Apart from their definitions, you should also identify the advantages and setbacks of each framework to better understand how they differ.

TensorFlow is a popular machine learning framework developed by Google. It replaced DistBelief, Google's earlier internal framework, and was released as open source in 2015; it has since grown into an end-to-end machine learning platform. The TensorFlow software library runs on almost all execution platforms, such as mobile devices, CPUs, GPUs, and TPUs.

The TensorFlow framework also includes a math library featuring trigonometric functions and basic arithmetic operators. In addition, TensorFlow Lite has been tailored specifically for machine learning on edge devices. It can run lightweight models on resource-constrained devices such as microcontrollers and smartphones.


Overview of PyTorch

A PyTorch vs TensorFlow comparison would be incomplete without understanding the origins of PyTorch. It arrived in 2016, at a time when most deep learning frameworks emphasized either usability or speed. PyTorch emerged as a promising tool for deep learning research by combining usability with performance.

The notable advantages of PyTorch stem from its programming style, which is idiomatic Python. As a result, PyTorch code is easier to debug and stays consistent with other well-known scientific computing libraries. PyTorch provides the desired functionality while remaining efficient and supporting hardware accelerators.

PyTorch is a popular Python library for fast execution of dynamic tensor computations, with GPU acceleration and automatic differentiation. The framework also stands out in PyTorch vs TensorFlow speed comparisons and offers better speed than most general-purpose deep learning libraries. Much of PyTorch's core is written in C++, which keeps its overhead low compared with other frameworks. PyTorch is a reliable choice for shortening the time required to design, train, and test neural networks.


Advantages and Limitations of TensorFlow

The first stage in comparing TensorFlow and PyTorch is to outline the advantages and limitations of each tool. TensorFlow offers crucial advantages for deep learning, such as visualization features for training, its open-source nature, and easier mobile support. It also offers a production-ready deployment path through TensorFlow Serving. On top of that, you can access TensorFlow functionality through a simple built-in high-level API, Keras. TensorFlow also benefits from strong community support and extensive documentation.

While TensorFlow brings a long list of advantages to the TensorFlow vs PyTorch debate, it also has certain limitations. Its setbacks include a complicated debugging process and static computation graphs (the default in TensorFlow 1.x; TensorFlow 2.x executes eagerly by default). Furthermore, it does not support quick modifications.

Advantages and Limitations of PyTorch

The advantages and limitations of PyTorch are also an important part of any discussion of how TensorFlow and PyTorch differ. First of all, PyTorch offers the flexibility of Pythonic programming alongside dynamic graphs. It also makes editing easier and faster. PyTorch is similar to TensorFlow in terms of community support, extensive documentation, and open-source nature. On top of that, many projects use PyTorch, which signals mainstream adoption.

Any comparison of PyTorch and TensorFlow must also account for the limitations of PyTorch. It needs a separate API server for production, unlike TensorFlow, which is production-ready. Another limitation of PyTorch is its reliance on third-party tools for visualization.


What Are The Differences Between TensorFlow and PyTorch?

The overview of PyTorch and TensorFlow, along with their advantages and limitations, offers a brief glimpse of how they compare. However, you need to dive deeper into other aspects to compare the two deep learning frameworks properly.

Here is a detailed outline of the prominent differences between TensorFlow and PyTorch:


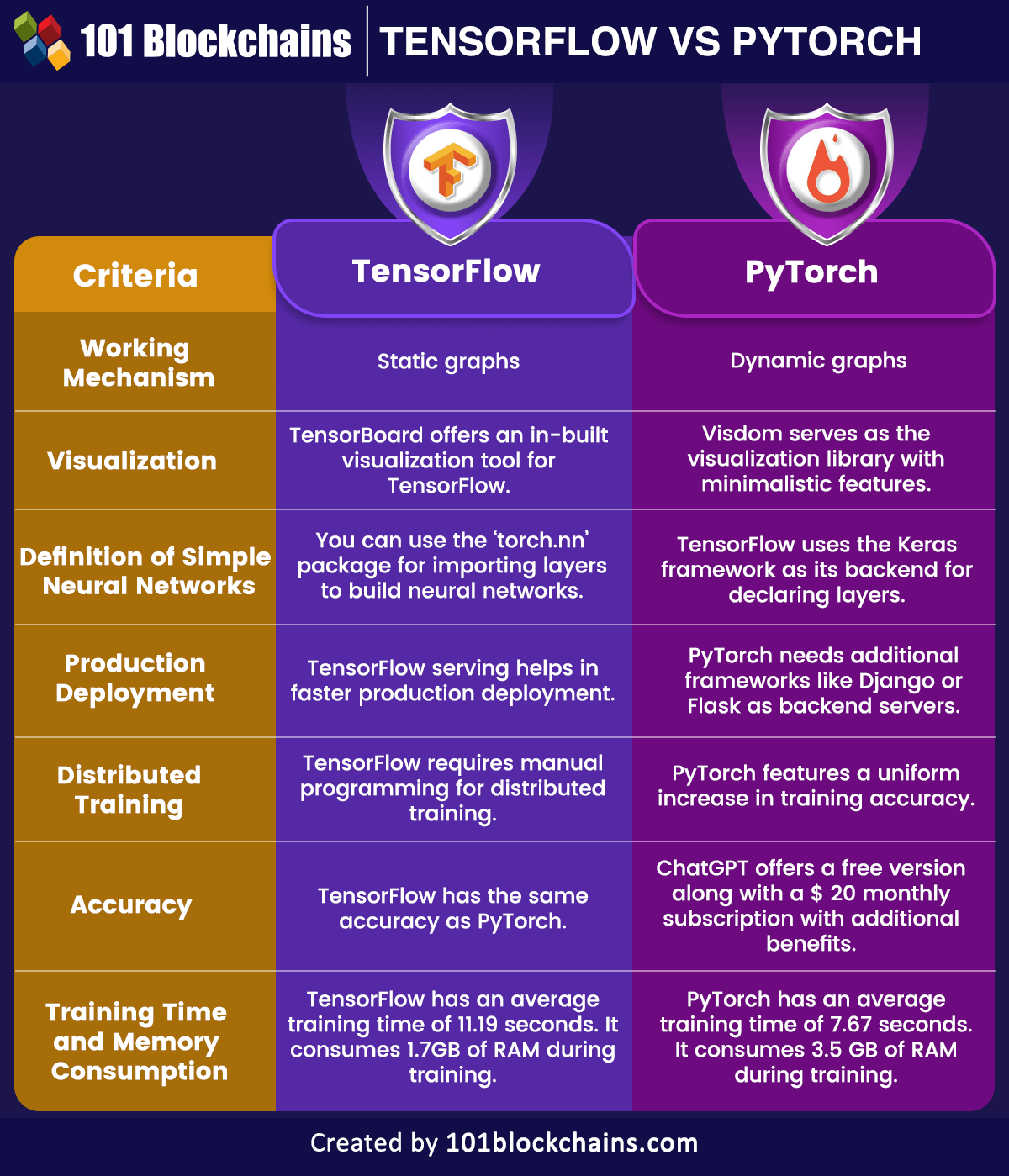

Working Mechanism

The first point of comparison is the working mechanism of each framework. TensorFlow has two core components: a library and a computational graph. The library lets you define computational graphs and provides a runtime that executes those graphs on different hardware platforms.

The computational graph is an abstraction that expresses computations as a directed graph. Graphs are data structures made of nodes (vertices) and edges, where pairs of vertices are connected by directed edges. In classic TensorFlow (version 1.x), the computation graph is defined statically before it is executed.

Programmers must route all interactions with the outside world through ‘tf.placeholder’ and ‘tf.Session’ objects: placeholders are tensors that receive external data at run time, when a session executes the graph. The core benefit of computation graphs is parallelism through dependency-driven scheduling, which makes training faster and more efficient.
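The define-then-run workflow described above can be sketched in plain Python. This is a toy under stated assumptions, not TensorFlow code: ‘Graph’, ‘placeholder’, ‘op’, ‘levels’ and ‘run’ are hypothetical names that loosely mirror the ‘tf.placeholder’/‘tf.Session’ pattern. Nodes are declared first, external data is fed in only when the graph runs, and ‘levels()’ groups nodes whose inputs are all available, which is the information a dependency-driven scheduler uses to execute independent operations in parallel.

```python
from collections import defaultdict


class Graph:
    """Toy define-then-run graph: nodes are declared first, values exist only in run()."""

    def __init__(self):
        self.ops = {}              # node name -> (function, list of input node names)
        self.placeholders = set()  # input slots filled with external data at run time

    def placeholder(self, name):
        # Plays the role of a placeholder-style input slot (illustrative only).
        self.placeholders.add(name)
        return name

    def op(self, name, fn, *inputs):
        # Declaring an op only records it; nothing is computed yet.
        self.ops[name] = (fn, list(inputs))
        return name

    def levels(self):
        """Group nodes by dependency depth; nodes in the same level are independent."""
        depth = {p: 0 for p in self.placeholders}

        def visit(node):
            if node not in depth:
                _, inputs = self.ops[node]
                depth[node] = 1 + max((visit(i) for i in inputs), default=0)
            return depth[node]

        for node in self.ops:
            visit(node)
        grouped = defaultdict(list)
        for node, d in depth.items():
            grouped[d].append(node)
        return [sorted(grouped[d]) for d in sorted(grouped)]

    def run(self, fetch, feed):
        """Evaluate the graph with placeholder values taken from `feed`."""
        values = dict(feed)
        for level in self.levels():
            # All inputs of this level are ready, so a real runtime could dispatch
            # these nodes in parallel; this sketch simply evaluates them in turn.
            for node in level:
                if node in self.ops:
                    fn, inputs = self.ops[node]
                    values[node] = fn(*(values[i] for i in inputs))
        return values[fetch]


g = Graph()
x = g.placeholder("x")
y = g.placeholder("y")
a = g.op("a", lambda v: v * 2, x)
b = g.op("b", lambda v: v + 1, y)
c = g.op("c", lambda p, q: p + q, a, b)
print(g.levels())                  # [['x', 'y'], ['a', 'b'], ['c']]
print(g.run(c, {"x": 3, "y": 4}))  # 11
```

Here ‘a’ and ‘b’ land in the same level because neither depends on the other, so a runtime is free to compute them at the same time before ‘c’.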

PyTorch also has two core building blocks. The first is the dynamic construction of computational graphs. The second is Autograd, which provides automatic differentiation for those dynamic graphs. Answers to questions like “Which is faster, PyTorch or TensorFlow?” often point to PyTorch's dynamic graphs.

In PyTorch, graphs change dynamically, and operations execute immediately as they are called, without special placeholders or session interfaces. Most important of all, PyTorch's tight integration with Python makes the framework easier to learn. Interestingly, you can also build dynamic graphs in TensorFlow by using the TensorFlow Fold library.
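PyTorch's define-by-run approach can be illustrated with a tiny reverse-mode automatic differentiation sketch in plain Python. It is a hypothetical toy (‘Value’ and ‘cube’ are illustrative names), not PyTorch's Autograd implementation: each arithmetic operation records its inputs while ordinary Python code runs, so loops and branches can change the graph on every call, and ‘backward()’ then walks the recorded graph in reverse to accumulate gradients.

```python
import math


class Value:
    """Toy scalar that records each operation as it executes (define-by-run)."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents    # Values this one was computed from
        self._grad_fns = grad_fns  # map the output gradient to each parent's share

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def tanh(self):
        t = math.tanh(self.data)
        return Value(t, (self,), (lambda g: g * (1 - t * t),))

    def backward(self):
        # Order the graph recorded during the forward pass (parents before children),
        # then push gradients from the output back towards the inputs.
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            for parent, grad_fn in zip(v._parents, v._grad_fns):
                parent.grad += grad_fn(v.grad)


def cube(x, steps=2):
    # Ordinary Python control flow decides the graph's shape at run time.
    y = x
    for _ in range(steps):
        y = y * x
    return y


x = Value(2.0)
y = cube(x)            # y = x * x * x, recorded while it runs
y.backward()
print(y.data, x.grad)  # 8.0 12.0  (d/dx x**3 = 3 * x**2)
```

In PyTorch itself, the equivalent computation is ‘x = torch.tensor(2.0, requires_grad=True)’, ‘y = x ** 3’, ‘y.backward()’, after which ‘x.grad’ holds 12.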



Visualization

The next point of comparison is visualization of the training process. Which framework offers better visualization of training? In TensorFlow vs PyTorch comparisons, the answer is TensorFlow.

Visualization is vital for developers because it helps them track the training process and debug more conveniently. TensorFlow includes a visualization toolkit called TensorBoard. PyTorch developers often use Visdom as their visualization dashboard, although its features are limited and minimalistic (PyTorch can also write TensorBoard logs through its ‘torch.utils.tensorboard’ module). Therefore, TensorFlow has the upper hand in visualizing the training process.


Definition of Simple Neural Networks

The methods for declaring neural networks in PyTorch and TensorFlow are another important point of difference. The ease of defining a network also sheds light on the PyTorch vs TensorFlow speed debate: how quickly can you set up a neural network in each framework?

PyTorch treats a neural network as a class that subclasses ‘torch.nn.Module’, and you can use the ‘torch.nn’ package to import the layers required to build the network architecture. You declare the layers in the “__init__()” method and define how the input passes through those layers in the “forward()” method. Finally, you create the model by instantiating the class and assigning it to a variable.

How does TensorFlow compare with PyTorch in the speed of setting up a neural network? TensorFlow has adopted Keras as its high-level API and uses Keras-style syntax for declaring layers. The first step is to declare a variable and assign it to the desired model type, for example “Sequential()”. Next, you add layers in sequence with the ‘model.add()’ method. The layer types themselves are imported from the ‘tf.keras.layers’ module.
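The two declaration styles can be compared side by side with a dependency-free toy. ‘Dense’, ‘relu’, ‘MLP’ and ‘Sequential’ below are hypothetical pure-Python stand-ins, not the real ‘torch.nn’ or ‘tf.keras’ classes: the first model follows the PyTorch pattern of declaring layers in ‘__init__()’ and wiring them in ‘forward()’, while the second follows the Keras pattern of creating a ‘Sequential’ container and calling ‘add()’ for each layer.

```python
import random


class Dense:
    """Toy fully connected layer: out[j] = sum_i(x[i] * w[i][j]) + b[j]."""

    def __init__(self, n_in, n_out, seed=0):
        rng = random.Random(seed)
        self.w = [[rng.uniform(-1, 1) for _ in range(n_out)] for _ in range(n_in)]
        self.b = [0.0] * n_out

    def __call__(self, x):
        return [sum(x[i] * self.w[i][j] for i in range(len(x))) + self.b[j]
                for j in range(len(self.b))]


def relu(x):
    return [max(0.0, v) for v in x]


# Style 1 (mirrors the PyTorch pattern): layers are declared in __init__,
# and forward() spells out the path the input takes through them.
class MLP:
    def __init__(self):
        self.fc1 = Dense(4, 8, seed=1)
        self.fc2 = Dense(8, 2, seed=2)

    def forward(self, x):
        return self.fc2(relu(self.fc1(x)))

    __call__ = forward


# Style 2 (mirrors the Keras pattern): create a container, then append layers
# with add(); data flows through them in insertion order.
class Sequential:
    def __init__(self):
        self.layers = []

    def add(self, layer):
        self.layers.append(layer)

    def __call__(self, x):
        for layer in self.layers:
            x = layer(x)
        return x


model_a = MLP()
model_b = Sequential()
model_b.add(Dense(4, 8, seed=1))
model_b.add(relu)
model_b.add(Dense(8, 2, seed=2))

x = [0.5, -1.0, 2.0, 0.1]
print(model_a(x) == model_b(x))  # True: same layers, same weights, same order
```

Because both models use the same seeded weights in the same order, they produce identical outputs. In the real libraries, the corresponding pieces are a subclass of ‘torch.nn.Module’ (whose ‘__init__()’ must call ‘super().__init__()’) built from ‘torch.nn.Linear’ layers, and ‘tf.keras.Sequential()’ populated with ‘tf.keras.layers.Dense’ layers. The class-based style makes arbitrary data paths easy to express, while the sequential style is shorter for plain stacks of layers.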



Production Deployment

Production deployment is another significant point of comparison. TensorFlow is an effective choice for deploying trained models in production. You can deploy models directly through the TensorFlow Serving framework, which exposes them through REST and gRPC APIs.

On the other hand, recent stable versions of PyTorch make production deployments easier to manage. However, PyTorch's core library does not include a framework for deploying models directly on the web, so you have to rely on third-party frameworks such as Django or Flask as the backend server. Therefore, TensorFlow is the better fit when production performance is the priority.
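Running a model behind a backend server mostly means wrapping a prediction function in an HTTP endpoint. The sketch below does this with only Python's standard library (‘http.server’) so that it runs anywhere; with Flask or Django, the handler body would move into a view function. ‘predict’ is a hypothetical stand-in for a trained model, and the JSON body (an ‘instances’ list in the request, a ‘predictions’ list in the response) is loosely modeled on TensorFlow Serving's REST predict API, but it is not a drop-in replacement for it.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(instance):
    # Hypothetical stand-in for a trained model: y = 2 * x + 1.
    return 2.0 * instance[0] + 1.0


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # JSON shape loosely modeled on TensorFlow Serving's REST predict API:
        # {"instances": [...]} in, {"predictions": [...]} out. The path is ignored.
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length))
        payload = json.dumps(
            {"predictions": [predict(inst) for inst in body["instances"]]}
        ).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, fmt, *args):
        pass  # silence the default per-request logging


def start_server(port=0):
    """Serve on a background thread; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), PredictHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def query(url, instances):
    """POST a batch of instances and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps({"instances": instances}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


server = start_server()
url = f"http://127.0.0.1:{server.server_port}/v1/models/demo:predict"
print(query(url, [[1.0], [3.0]]))  # {'predictions': [3.0, 7.0]}
server.shutdown()
server.server_close()
```

TensorFlow Serving needs no handler code of this kind, since it loads an exported SavedModel and exposes the endpoint itself; the hand-written server is the piece you take on when the framework does not provide one.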


Distributed Training

The comparison between PyTorch and TensorFlow also brings distributed training into the spotlight, where data parallelism is the key consideration. PyTorch can optimize performance through its native support for asynchronous execution in Python.

With TensorFlow, on the other hand, you may have to go through the trouble of manually coding and fine-tuning every task on specific devices to enable distributed training. That said, programmers can replicate everything PyTorch does in TensorFlow, albeit with some effort.
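The data parallelism mentioned above works by giving every worker a copy of the model, letting each compute gradients on its own slice of the batch, and averaging those gradients before the weights are updated. The following pure-Python sketch simulates that synchronous scheme with threads for a one-parameter linear model; ‘grad_mse’ and ‘data_parallel_grad’ are illustrative names, and this is not how PyTorch's ‘DistributedDataParallel’ or TensorFlow's ‘tf.distribute’ strategies are implemented. With equal-sized shards and a mean-reduced loss, the averaged gradient equals the full-batch gradient.

```python
from concurrent.futures import ThreadPoolExecutor


def grad_mse(w, batch):
    """Gradient of the mean squared error of the 1-D model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_grad(w, batch, n_workers):
    """Simulate synchronous data parallelism: shard, compute locally, average."""
    assert len(batch) % n_workers == 0, "sketch assumes equal-sized shards"
    size = len(batch) // n_workers
    shards = [batch[i * size:(i + 1) * size] for i in range(n_workers)]
    # Each "worker" holds the same weight w and computes a gradient on its shard.
    # Threads only simulate workers here; they give no real speed-up in CPython.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        grads = list(pool.map(lambda shard: grad_mse(w, shard), shards))
    # All-reduce step: average the per-worker gradients.
    return sum(grads) / n_workers


batch = [(float(x), 3.0 * x) for x in range(1, 9)]  # data from y = 3x
w = 1.0
print(grad_mse(w, batch), data_parallel_grad(w, batch, n_workers=4))  # -102.0 -102.0

# A few synchronous data-parallel SGD steps move w towards the true slope 3.
for _ in range(50):
    w -= 0.01 * data_parallel_grad(w, batch, n_workers=4)
print(round(w, 3))  # 3.0
```

Averaging is what keeps every worker's copy of the model identical after each step; frameworks differ mainly in how efficiently they perform that all-reduce across real devices.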



Accuracy

Discussions of the differences between PyTorch and TensorFlow revolve primarily around speed and performance. However, you need to move beyond questions like “Which is faster, PyTorch or TensorFlow?” to identify the better alternative. The accuracy curves of PyTorch and TensorFlow are similar, with comparable variation in training accuracy. In both frameworks, training accuracy rises steadily as the models begin to memorize the training data.


Training Time and Memory Consumption

A PyTorch vs TensorFlow speed comparison becomes clearer when you look at training time and memory usage. Training takes noticeably longer in TensorFlow: its average training time is 11.19 seconds, compared with 7.67 seconds for PyTorch.

In terms of memory consumption, TensorFlow used 1.7 GB of RAM during training, whereas PyTorch used 3.5 GB. However, the difference in memory consumption between the two frameworks during initial data loading is minimal.
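From the figures above, TensorFlow's average training time is about 11.19 / 7.67 ≈ 1.46 times PyTorch's, while PyTorch's RAM use is about 3.5 / 1.7 ≈ 2.06 times TensorFlow's, so the two metrics favor different frameworks. Numbers like these depend heavily on the model, batch size, and hardware, so it is worth measuring your own workload. The helper below is a minimal sketch using the standard ‘time’ and ‘tracemalloc’ modules; ‘benchmark’ and ‘toy_training_epoch’ are hypothetical names, and ‘tracemalloc’ only tracks memory allocated through Python's allocator, so it misses most of what a framework allocates natively or on a GPU.

```python
import time
import tracemalloc


def benchmark(fn, repeats=3):
    """Return (mean wall-clock seconds, peak traced bytes) for calling fn()."""
    # Time untraced runs first, because tracemalloc itself slows execution down.
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        times.append(time.perf_counter() - start)
    # Measure peak memory in a separate traced run. tracemalloc only sees memory
    # allocated through Python's allocator, not native or GPU buffers.
    tracemalloc.start()
    fn()
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return sum(times) / len(times), peak


def toy_training_epoch():
    # Hypothetical stand-in for a training epoch: allocate data, then reduce it.
    data = [float(i) for i in range(200_000)]
    return sum(v * v for v in data)


mean_s, peak_bytes = benchmark(toy_training_epoch)
print(f"mean time: {mean_s:.4f} s, peak traced memory: {peak_bytes / 1e6:.1f} MB")
```

To compare frameworks, pass each framework's training loop as ‘fn’ on the same machine and data; for native and GPU memory, use the framework's own tooling or an OS-level measurement instead.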


Final Words

This TensorFlow vs PyTorch comparison shows that TensorFlow is a powerful and sophisticated deep learning framework. For example, it offers extensive visualization capabilities through TensorBoard. TensorFlow also provides production-ready deployment options and supports many hardware platforms. PyTorch, on the other side of the comparison, is the newer framework and offers the flexibility of tight integration with Python. Learn more about the features and use cases of both frameworks before using one in your next project.